SNP calling for GBS data using Tassel pipeline

Introduction

Genotyping-by-sequencing (GBS) is a technique that allows to rapidly detect nucleotide variation across the whole genome. This allows to genotype large number of individuals, simultaneously, at a very low cost as this is a reduced representation of the genome. It can work with or without a reference genome, and easily generates large number of useful information for these individuals at population level.

Dataset

In this tutorial, we will use NAM population GBS data generated by Rodgers-Melnick et al., 2015. This GBS data is collected from 5000 RIL individuals belonging to NAM population (25 diverse maize lines crossed to B73). Originally, SNPs were called using B73 V3 genome and the results were used for various purposes. Since there is more latest version of the genome, we can replicate the same analyses, using Tassel. Note that there is also a tutorial for the same dataset using Stacks instead, accessible here. We will only be usign command-line version of the Tassel for this exercise.

The dataset for this GBS is available on NCBI SRP009896 and there are 91 SRRs associated with it. The metadata for these SRRs are available on CyVerse Data Commons, at this location:

1

/iplant/home/shared/panzea/genotypes/GBS/v27/

Specifically, you will need AllZeaGBSv2.7_publicSamples_metadata20140411.xlsx file present in that directory. This file is essential not only to demultiplex the SRR data you download, but also, if you are interested in doing downstream analyses it will tell the sample pedigree information.

Organization

1

2

3

4

tassel/

├── 0_genome

├── 1_data

└── 2_rundir

The organization is simple in this case as we will run all the steps in single directory.

Programs required

Overview

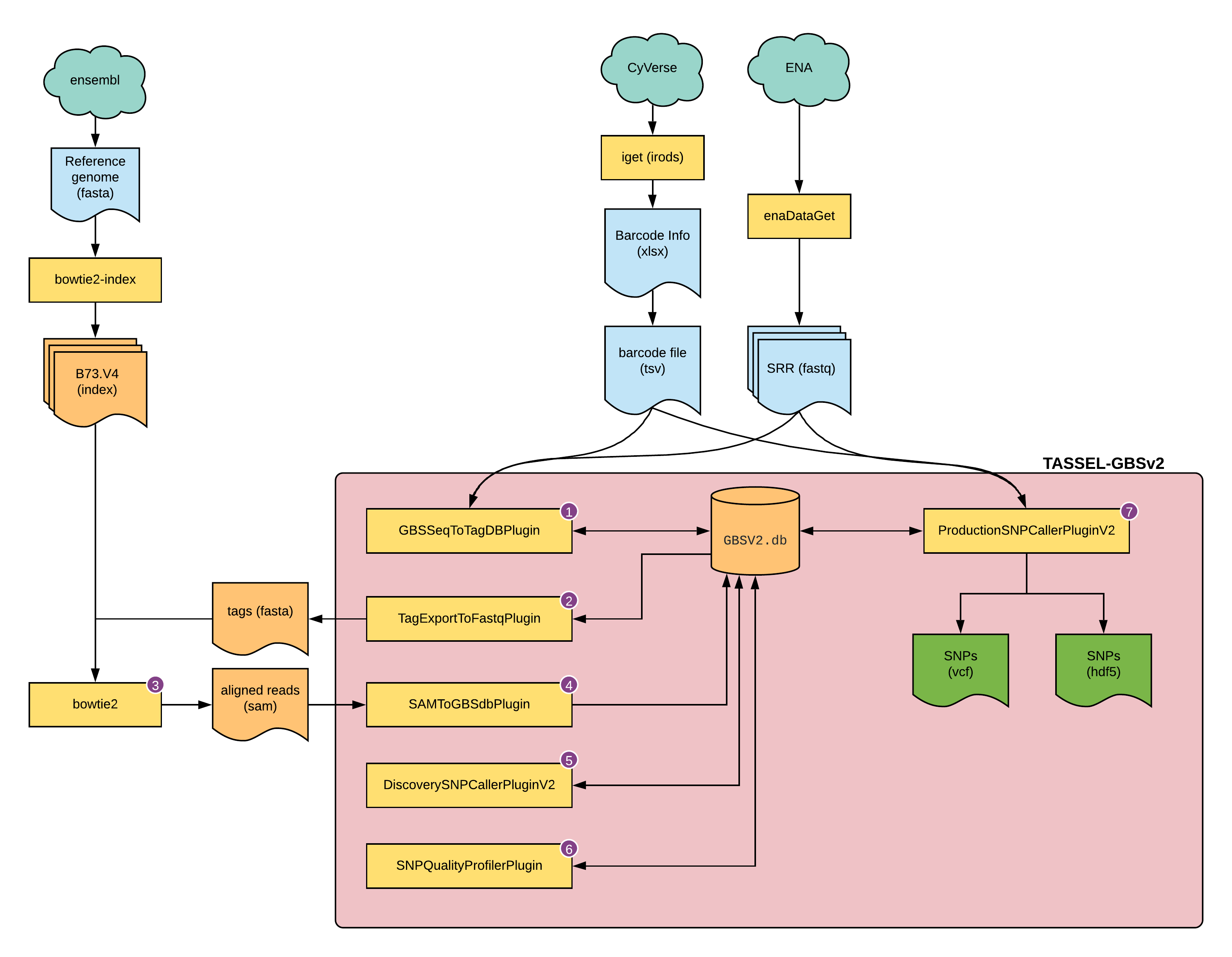

Fig 1: overview of this tutorial

Fig 1: overview of this tutorial

Fastq

First, download the 91 SRR datasets from the SRA database. For this you can use the NCBI-edirect package to obtain the list of SRRs and then enaBrowserTools to download them.

1

2

3

4

5

6

7

8

9

cd tassel/1_data

esearch -db sra -query SRP009896 |\

efetch --format runinfo |\

cut -d , -f 1 |\

awk 'NF>0' |\

grep -v Run > srr.ids

while read line; do

enaDataGet -f fastq -a $line;

done<srr.ids

This will generate 91 folders with single fastq (compressed) file each.

Barcodes

From the spreadsheet downloaded, you will need to extract the required information (flowcell, lane, barcode, and samplename) and generate a simple text file. You can use the SRR id for the flowcell to make the analyses simpler. If you have the same samplename Since there will be samples with same samplename in multiple SRR files, we need to process them separately. The combined, extracted, and cleaned barcode file is provided here in its final form for reference. If you have your own data, it is essential that you create the barcode file in similar format. This file is placed in 2_rundir directory and it will be split into multiple files based on SRR ids.

Before proceeding, we need to make sure that the data input has the right name and extension for Tassel to recognize it as a valid input. Instead of changing the names for the files we downloaded or instead of making a copy of all the files, we will simply softlink them with the right name and provide that as input instead.

1

2

3

4

5

cd 2_rundir

cut -f 1,2 barcodes-gbs-data.tsv | sort | uniq > uniq-names.txt

while read a b; do

ln -s ../../a_data/${a}.fastq.gz ${a}_${b}_fastq.txt.gz;

done<uniq-names.txt

The file barcodes-gbs-data.tsv needs to have header to be correctly read by the Tassel, so we will add that next:

1

2

echo -e "Flowcell\tLane\tBarcode\tFullSampleName" > header

cat header barcodes-gbs-data.tsv >> barcode-for-tassel.tsv

Genome

B73 version 4 from Gramene, download and BWA index:

1

2

3

4

5

cd tassel/0_genome

wget ftp://ftp.gramene.org/pub/gramene/CURRENT_RELEASE/fasta/zea_mays/dna/Zea_mays.B73_RefGen_v4.dna.toplevel.fa.gz

gunzip Zea_mays.B73_RefGen_v4.dna.toplevel.fa.gz

ml bowtie2

bowtie2-build Zea_mays.B73_RefGen_v4.dna.toplevel.fa B73.v4

Tassel

1. GBSSeqToTagDBPlugin

GBSSeqToTagDBPlugin takes fastQ files as input, identifies tags and the taxa in which they appear, and stores this data to a local database. It keeps only good reads having a barcode and a cut site and no N’s in the useful part of the sequence. It trims off the barcodes and truncates sequences that (1) have a second cut site, or (2) read into the common adapter.

script runTassel_0.sh

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

#/bin/bash

ml tassel

cwd=$(pwd)

barcodes=${cwd}/barcode-for-tassel.tsv

db=${cwd}/GBSV2.db

run_pipeline.pl -Xms100G -Xmx180G -fork1 -GBSSeqToTagDBPlugin \

-e ApeKI \

-i ${cwd} \

-db ${db} \

-k ${barcodes} \

-kmerLength 33 \

-minKmerL 11 \

-mnQS 20 \

-mxKmerNum 1000000000 \

-endPlugin \

-runfork1

Create job and submit:

1

2

3

echo "./runTassel_0.sh" > tassel_0.cmds

makeSLURMs.py 1 tassel_0.cmds

sbatch tassel_0_0.sub

The output for this step is GBSV2.db database file.

2. TagExportToFastqPlugin

TagExportToFastqPlugin retrieves distinct tags stored in the database and reformats them to a FASTQ file that can be read by the Bowtie2 or BWA aligner program.

script runTassel_1.sh

1

2

3

4

5

6

7

8

9

10

11

#/bin/bash

ml tassel

cwd=$(pwd)

barcodes=${cwd}/barcode-for-tassel.tsv

db=${cwd}/GBSV2.db

run_pipeline.pl -Xms100G -Xmx180G -fork1 -TagExportToFastqPlugin \

-db ${db} \

-o ${cwd}/tagsForAlign.fa.gz \

-c 1 \

-endPlugin \

-runfork1

Create job and submit:

1

2

3

echo "./runTassel_1.sh" > tassel_1.cmds

makeSLURMs.py 1 tassel_1.cmds

sbatch tassel_1_0.sub

The output form this step is tagsForAlign.fa.gz file (compressed fasta file).

3. Alignment

Any alignment method can be used. Here we use BowTie2

script runBowtie2.sh

1

2

3

4

5

6

7

8

9

10

#/bin/bash

ml bowtie2

cwd=$(pwd)

barcodes=${cwd}/barcode-for-tassel.tsv

db=${cwd}/GBSV2.db

btindex="/work/LAS/mhufford-lab/arnstrm/tassel/0_genome/B73.v4"

bowtie2 -p 36 --very-sensitive -x ${btindex} -U ${cwd}/tagsForAlign.fa.gz -S tagsForAlignFullvs.sam

SAM="tagsForAlignFullvs.sam"

samtools view --threads 36 -b -o ${SAM%.*}.bam ${SAM}

samtools sort -o ${SAM%.*}_sorted.bam -T ${SAM%.*}_temp --threads 36 ${SAM%.*}.bam

Create job and submit:

1

2

3

echo "./runBowtie2.sh" > tassel_2.cmds

makeSLURMs.py 1 tassel_2.cmds

sbatch tassel_2_0.sub

The result for this step is tagsForAlignFullvs.sam, but the same file in bam format as well as sorted bam is also generated.

4. SAMToGBSdbPlugin

SAMToGBSdbPlugin reads a SAM file to determine the potential positions of Tags against the reference genome. The plugin updates the current database with information on tag cut positions. It will throw an error if there are tags found in the SAM file that can not be matched to tags in the database.

script runTassel_3.sh

1

2

3

4

5

6

7

8

9

10

11

12

#/bin/bash

ml tassel

cwd=$(pwd)

barcodes=${cwd}/barcode-for-tassel.tsv

db=${cwd}/GBSV2.db

run_pipeline.pl -Xms100G -Xmx180G -fork1 -SAMToGBSdbPlugin \

-i ${cwd}/tagsForAlignFullvs.sam \

-db ${db} \

-aProp 0.0 \

-aLen 0 \

-endPlugin \

-runfork1

Create job and submit:

1

2

3

echo "./runTassel_3.sh" > tassel_3.cmds

makeSLURMs.py 1 tassel_3.cmds

sbatch tassel_3_0.sub

This step will not generate any output, but you will notice the database (GBSV2.db) has been modified.

5. DiscoverySNPCallerPluginV2

DiscoverySNPCallerPluginV2 takes a GBSv2 database file as input and identifies SNPs from the aligned tags. Tags positioned at the same physical location are aligned against one another, SNPs are called from the aligned tags, and the SNP position and allele data are written to the database.

script runTassel_4.sh Note: change the -sC and -eC for start and end chromosome for which you need stats for.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

#/bin/bash

ml tassel

cwd=$(pwd)

barcodes=${cwd}/barcode-for-tassel.tsv

db=${cwd}/GBSV2.db

run_pipeline.pl -Xms100G -Xmx180G -fork1 -DiscoverySNPCallerPluginV2 \

-db ${db} \

-sC 1 \

-eC 10 \

-mnLCov 0.1 \

-mnMAF 0.01 \

-deleteOldData true \

-endPlugin \

-runfork1

Create job and submit:

1

2

3

echo "./runTassel_4.sh" > tassel_4.cmds

makeSLURMs.py 1 tassel_4.cmds

sbatch tassel_4_0.sub

This step will not generate any output, but you will notice the database (GBSV2.db) has been modified.

6. SNPQualityProfilerPlugin

This plugin scores all discovered SNPs for various coverage, depth and genotypic statistics for a given set of taxa. If no taxa are specified, the plugin will score all taxa currently stored in the data base. If no taxa file is specified, the plugin uses the taxa stored in the database.

script runTassel_5.sh

1

2

3

4

5

6

7

8

9

10

11

#/bin/bash

ml tassel

cwd=$(pwd)

barcodes=${cwd}/barcode-for-tassel.tsv

db=${cwd}/GBSV2.db

run_pipeline.pl -Xms100G -Xmx180G -fork1 -SNPQualityProfilerPlugin \

-db ${db}

-statFile "outputStats.txt" \

-deleteOldData true \

-endPlugin \

-runfork1

Create job and submit:

1

2

3

echo "./runTassel_5.sh" > tassel_5.cmds

makeSLURMs.py 1 tassel_5.cmds

sbatch tassel_5_0.sub

Output for this step is the file outputStats.txt with SNP statistics for chromosome 9 and 10.

7. ProductionSNPCallerPluginV2

This plugin converts data from fastq and keyfile to genotypes, then adds these to a genotype file in VCF or HDF5 format. VCF is the default output. An HDF5 file may be requested by using the suffix “.h5” on the file used in the output file parameter. Merging of samples to the same HDF5 output file may be accomplished by using the –ko option described below.

script runTassel_6.sh

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

#!/bin/bash

ml tassel

cwd=$(pwd)

barcodes=${cwd}/barcode-for-tassel.tsv

db=${cwd}/GBSV2.db

run_pipeline.pl -Xms100G -Xmx180G -fork1 -ProductionSNPCallerPluginV2 \

-db ${db} \

-e ApeKI \

-i ${cwd} \

-k ${barcodes} \

-kmerLength 33 \

-o ${cwd}/tassel-gbs.vcf \

-endPlugin \

-runfork1

run_pipeline.pl -Xms100G -Xmx180G -fork1 -ProductionSNPCallerPluginV2 \

-db ${db} \

-e ApeKI \

-i ${cwd} \

-k ${barcodes} \

-kmerLength 33 \

-o ${cwd}/tassel-gbs.h5 \

-endPlugin \

-runfork1

Create job and submit:

1

2

3

echo "./runTassel_6.sh" > tassel_6.cmds

makeSLURMs.py 1 tassel_6.cmds

sbatch tassel_6_0.sub

The final file tassel-gbs.vcf and tassel-gbs.h5 are the genotypes in VCF and HDF5 format.

Pitfalls:

- You get

java.lang.OutOfMemoryError: GC overhead limit exceedederror:

When you run the run_pipeline.pl script, the default heap size is set to start with -Xms512m for a max of -Xmx1536m. This will definitely be not enough for a data size like this. You should set this to a maximum number that is available for your machine. To set a different value:

1

run_pipeline.pl -Xms100G -Xmx180G

to set starting heap size of 100Gb and max heap size of 180Gb.

If this doesn’t work, then you will most likely have to find a bigger machine with higher memory.